Dealing with Extreme Cardinality Joins

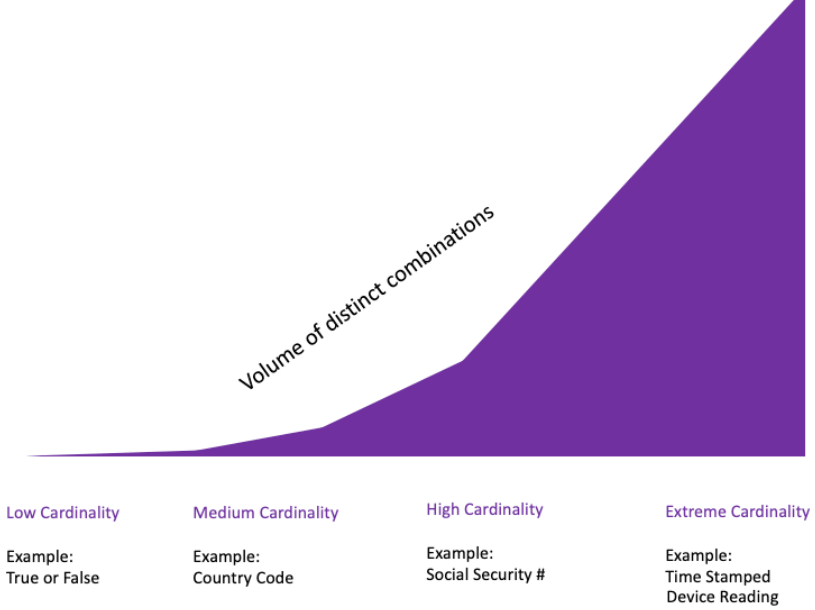

High cardinality data can be more difficult to efficiently analyze because many unique elements increase the computational cost for analysis, and make it more challenging to identify useful insights from the data.

Cardinality refers to the number of unique elements in a set. For instance, low cardinality data sets include the names of a few countries, or data on the weather where there are only a few distinct values (such as sunny, cloudy, rainy, etc.), or data on the ages of a group of people where there are only a few distinct age groups. Historically, an example of high cardinality data might be a social security number or a driver’s license number where the number of unique identifiers number in the hundreds of millions. Today, the prevalence of digital devices and the internet has made it possible for people and machines to generate vast amounts of high cardinality data through their online activities that result in unique combinations of device IDs, timestamps, and geo-coding. This has resulted in many digital organizations being faced with analyzing unique events measuring in the hundreds of billions.

Joining two tables on an extreme cardinality column can be computationally intensive because it involves comparing each value in one table to each value in the other table. For example, if Table A has 100,000 unique values and Table B has 50,000,000 unique values, then the total number of comparisons that need to be made is 100,000 * 50,000,000 = 5,000,000,000,000 (Five trillion!). This can be a time-consuming process when the tables are large and the comparisons need to be performed on multiple columns. This becomes exacerbated if the metrics require continuous recalculations as is the case with near real-time analytics to support fleet management, cyber-security, network optimization, real-time trading, and other time-critical services.

Extreme cardinality joins often require a full table scan. A full table scan occurs when a database reads and processes the entire contents of a table in order to find the data that matches the specified criteria for a query. This can be a time-consuming and resource-intensive operation that can cause the database to become slow or unresponsive. Additionally, full table scans can be memory intensive because they may require temporary tables or other data structures to store the intermediate results of the comparisons. This can increase the amount of memory needed to perform the join, which can further impact the performance of the operation. Further, full table scans can interfere with other operations that are running on the database. For example, if the database is executing multiple queries at the same time, a full table scan can consume a large amount of CPU, memory, and I/O resources, which can cause other queries to run slower or fail.

In some cases, it may be possible to avoid a full table scan by using appropriate optimization techniques, but in other cases a full table scan may be inevitable.

The traditional solution

The traditional approach taken by teams that provision the data analytics platform is to ask the question, “How do we avoid full table scans?”

There are several ways to avoid full table scans when doing analytics in a database. One way is to use indexes to speed up data access. Indexes are data structures that are created on specific columns or combinations of columns in a table. They can be used to quickly locate and retrieve data from a table without needing to scan the entire table. By creating appropriate indexes on a table, you can often avoid full table scans and improve the performance of your queries.

Another way to avoid full table scans is to use partitioning. Partitioning allows you to split a table into smaller, more manageable pieces, each of which can be stored and accessed independently. This can make it easier to manage large tables and can allow you to perform queries and analytics on a smaller subset of the data, rather than scanning the entire table.

An additional technique to avoid full table scans is to use filters and predicates to narrow down the data that is being accessed. By using filters and predicates, you can specify exactly which rows in a table you want to include or exclude in your query. This can help to reduce the amount of data that needs to be scanned and can improve the performance of your queries.

Finally, pre-processing the data to remove any unnecessary or redundant values in the join columns can accelerate query speeds and reduce resources required, but at the expense of data engineering costs and adding latency from the time data is created until insights are derived.

The problem with all these techniques is that they were created to avoid full table scans where the cardinality is low or moderate. However, when the cardinality is extreme, these techniques offer little benefits because the data doesn’t conform to the sub-dividing that is the entire premise of the approach.

A Better Way

The modern approach to solve issues with high cardinality data analytics asks the question, “How can we make full table scans more efficient?”

Making full table scans efficient involves representing data as vectors (arrays of numbers) and then using mathematical operations on those vectors to solve problems. This approach to boost performance has become known in the industry as vectorization or data level parallelism.

In a vectorized query engine, data is stored in fixed-size blocks called vectors, and query operations are performed on these vectors in parallel, rather than on individual data elements. Algorithms go from operating on a single value at a time to operating on a set of values at a time. In other words, it is the ability to do a single mathematical operation on a list (or “vector”) of numbers in one single step. This allows the query engine to process multiple data elements simultaneously, making full table scans orders of magnitude faster.

Kinetica’s fully-vectorized database significantly outperforms traditional cloud databases for big data analytics, by harnessing data-level parallelism using the built-in Advanced Vector Extensions (AVX-512) of our latest 3rd Gen Intel® Xeon® Scalable processors.

Jeremy Rader, GM, Enterprise Strategy & Solutions for the Data Platforms Group at Intel

In contrast, conventional distributed analytic databases process data on a row-by-row basis within each node, which is ultimately slower and requires more computational resources. Kinetica takes advantage of advances in GPUs and vectorized CPUs to process data in large blocks, allowing them to execute queries more quickly and efficiently. This can significantly reducethe amount of time and resources for joins on large amounts of high cardinality data.

“Kinetica enables enterprises to easily speed up the databases that power their work. Across multiple industries, building intelligent, AI-powered applications with access to up-to-date time series and location data is becoming key to success. Kinetica has a long history of working with NVIDIA to optimize their real-time analytics database with accelerated computing.”

Scott McClellan, Senior Director of Data Science and MLOps at NVIDIA.

Benchmarks show that fully vectorized query engines like Kinetica are 50X faster on average than other distributed analytic databases. If you work with large amounts of high cardinality data, Try Kinetica for Free to see how much faster your high cardinality queries run.

Making Sense of Sensor Data