Real-time Event Analytics at Scale

Kinetica delivers real-time insights from massive data streams—whether from IoT sensors, financial transactions, or web traffic. Our vectorized compute engine can ingest and query data simultaneously, powering critical use cases like threat detection in US Airspace, fraud prevention in financial systems, and monitoring the performance of Formula E race cars. Kinetica’s ability to execute complex queries on real-time data enables our customers to make faster, smarter decisions.

Spatial Analytics on Steroids

Points and shapes are native geospatial objects in Kinetica and can be expressed as WKT. Tracks are another native geospatial object type that can be generated from sequential data to represent the paths objects take as they move.
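For readers new to WKT, here is a minimal Python sketch of how a point and a closed polygon ring are written as WKT strings. The helper names `point_wkt` and `polygon_wkt` are hypothetical and are not part of any Kinetica API; they only illustrate the text format.

```python
def point_wkt(lon, lat):
    """Format a longitude/latitude pair as a WKT POINT string."""
    return f"POINT({lon} {lat})"


def polygon_wkt(ring):
    """Format a list of (lon, lat) vertices as a WKT POLYGON string.

    WKT polygon rings must be closed, so the first vertex is repeated
    at the end when the caller has not already done so.
    """
    if ring[0] != ring[-1]:
        ring = ring + [ring[0]]
    coords = ", ".join(f"{lon} {lat}" for lon, lat in ring)
    return f"POLYGON(({coords}))"
```

Strings in this form can be used wherever a geometry is expected as WKT text.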

Over 130 high-performance geospatial functions are available through SQL or the REST API. Functions include tools to filter, compare, or aggregate data by area, by track, or by custom shape.

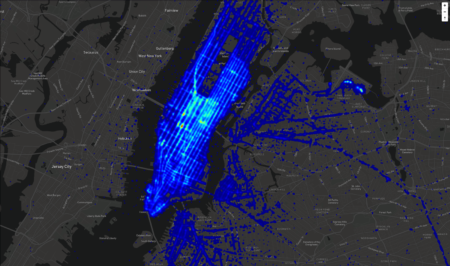

Entity Tracking

Entity tracking is a key capability for logistics, supply chain management, and national security. Organizations like Penske and the US Federal Aviation Administration use Kinetica to leverage millions of real-time records every second to track entities through time and space.

Alerts when objects dwell or loiter.

Identify when objects are dwelling or loitering. SQL expressions make it easy to set up alerts and identify problems or questionable activity.
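To make the idea of dwelling concrete, the following Python sketch flags the intervals during which one object stays inside a rectangular geofence for at least a minimum duration. It is an illustration of the logic only, not Kinetica's SQL; the function name `dwell_intervals`, the tuple layout, and the rectangular fence are all assumptions.

```python
def dwell_intervals(pings, fence, min_seconds):
    """Return (start, end) intervals where an object stays inside a fence.

    pings: list of (timestamp_seconds, x, y), sorted by time, for one object.
    fence: (min_x, min_y, max_x, max_y) rectangle (an assumed simplification).
    An interval is reported only if it lasts at least min_seconds.
    """
    min_x, min_y, max_x, max_y = fence
    intervals = []
    start = last = None
    for ts, x, y in pings:
        inside = min_x <= x <= max_x and min_y <= y <= max_y
        if inside:
            if start is None:
                start = ts          # object just entered the fence
            last = ts               # most recent ping seen inside
        elif start is not None:
            if last - start >= min_seconds:
                intervals.append((start, last))
            start = last = None     # object left the fence
    # Close out an interval that is still open at the end of the data.
    if start is not None and last - start >= min_seconds:
        intervals.append((start, last))
    return intervals
```

For example, an object pinging inside the fence at 0, 60, and 120 seconds and then leaving would produce one dwell interval of `(0, 120)` for a 100-second threshold.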

What objects pass through an area?

Use the ST_TRACKINTERSECTS function to determine whether a track intersects with a geofenced area.
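As a rough picture of what a track-versus-geofence test involves, the Python sketch below checks whether a polyline passes through a polygon: either a track vertex lies inside the polygon, or a track segment crosses a polygon edge. This is a simplified planar illustration and makes no claim about how ST_TRACKINTERSECTS is implemented; all function names here are hypothetical.

```python
def _orient(a, b, c):
    """Signed area test: > 0 if c is left of a->b, < 0 if right, 0 if collinear."""
    return (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])


def _segments_cross(p1, p2, q1, q2):
    """True if the two segments properly cross (touching cases are ignored)."""
    return (_orient(q1, q2, p1) * _orient(q1, q2, p2) < 0
            and _orient(p1, p2, q1) * _orient(p1, p2, q2) < 0)


def _point_in_polygon(pt, polygon):
    """Ray-casting test for a point against a list of polygon vertices."""
    x, y = pt
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def track_intersects(track, polygon):
    """True if a track (list of (x, y) points) enters or crosses the polygon."""
    if any(_point_in_polygon(p, polygon) for p in track):
        return True
    n = len(polygon)
    edges = [(polygon[i], polygon[(i + 1) % n]) for i in range(n)]
    return any(_segments_cross(a, b, e1, e2)
               for a, b in zip(track, track[1:])
               for e1, e2 in edges)
```

The second check matters because a fast-moving object can cross a small geofence between two consecutive position reports without ever reporting a point inside it.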

When two tracks come close

Spot close calls. The ST_DWITHIN function can be used to detect when tracks are within a particular distance and time of each other.
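The underlying test is simple to state: two position reports count as a close call when they are near each other in both space and time. The Python sketch below shows that condition with a brute-force comparison; it assumes planar coordinates and hypothetical names, and it is not a description of how ST_DWITHIN is evaluated.

```python
import math


def close_encounters(track_a, track_b, max_distance, max_seconds):
    """Return (time_a, time_b) pairs where two tracks come close.

    Each track is a list of (timestamp_seconds, x, y). A pair qualifies when
    the two reports are within max_distance of each other and their
    timestamps differ by at most max_seconds.
    """
    hits = []
    for ta, xa, ya in track_a:
        for tb, xb, yb in track_b:
            near_in_time = abs(ta - tb) <= max_seconds
            near_in_space = math.hypot(xa - xb, ya - yb) <= max_distance
            if near_in_time and near_in_space:
                hits.append((ta, tb))
    return hits
```

Comparing every pair of reports grows quadratically with track length, which is why this kind of question is typically pushed down to the database as a spatial join.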

Moving tracks passing through changing areas

Spatial joins in Kinetica are fast. Perform complex real-time analysis on weather systems and airplanes as they move across space and time.

High Performance on Time-Series and Spatial Joins

Questions asked of time-series and spatial datasets are typically compute intensive. Kinetica’s vectorized architecture is optimized for running spatial joins on high-cardinality data with inexact keys.

Work with Time-Series Data with Ease

Window functions and AS-OF joins enable you to make sense of events as they evolve.

Even with Unknown Questions

Window functions in Kinetica allow you to apply aggregate and ranking functions over a period of time, and keep the picture updated as data evolves.
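For intuition, here is a small Python sketch of one common windowed aggregate: a trailing average over a fixed time window that updates as each new event arrives. It illustrates the concept only and is not Kinetica SQL; the name `trailing_average` and the window convention (events newer than `ts - window_seconds` are kept) are assumptions.

```python
from collections import deque


def trailing_average(events, window_seconds):
    """Compute a trailing average for each event in a time-ordered stream.

    events: list of (timestamp_seconds, value), sorted by time.
    Returns a list of (timestamp, average of values in (ts - window, ts]).
    """
    window = deque()
    total = 0.0
    out = []
    for ts, value in events:
        window.append((ts, value))
        total += value
        # Evict events that have fallen out of the window.
        while window[0][0] <= ts - window_seconds:
            _, old_value = window.popleft()
            total -= old_value
        out.append((ts, total / len(window)))
    return out
```

Because old events are evicted as new ones arrive, each result reflects only the most recent period rather than the whole history.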

Kinetica’s ASOF joins allow you to join data from multiple tables when the timestamps don’t exactly match. This makes it easier to understand what’s happening even when there aren’t exact matches on timestamps from the two tables.
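The following Python sketch shows the idea behind an as-of match: each row on the left is paired with the most recent row on the right whose timestamp is at or before it, optionally within a tolerance. It is a conceptual illustration with hypothetical names, not Kinetica's ASOF syntax or implementation.

```python
from bisect import bisect_right


def asof_join(left, right, tolerance=None):
    """Pair each left row with the latest right row at or before its timestamp.

    left, right: lists of (timestamp, value), each sorted by timestamp.
    tolerance: optional maximum allowed gap between the two timestamps.
    Returns a list of (timestamp, left_value, right_value_or_None).
    """
    right_ts = [ts for ts, _ in right]
    joined = []
    for ts, value in left:
        i = bisect_right(right_ts, ts) - 1   # last right row with ts <= left ts
        match = None
        if i >= 0 and (tolerance is None or ts - right_ts[i] <= tolerance):
            match = right[i][1]
        joined.append((ts, value, match))
    return joined
```

A typical use is pairing each trade with the latest quote that preceded it, even though the two feeds never share exact timestamps.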

Blog: ASOF joins in Kinetica

Try Kinetica Now:

Kinetica Cloud is free for projects up to 10GB

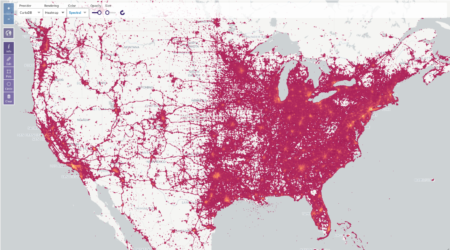

Server-side Visualizations

For almost-instant visualizations of large geospatial datasets, Kinetica can generate sophisticated WMS map overlays directly on the server.

One of the core challenges with displaying spatial information is moving data from the database layer to the visualization layer. Serializing and moving millions to billions of objects from one technology to another takes time.

Kinetica solves this bottleneck by generating geospatial tiles directly on the server through a Web Map Service (WMS). WMS tiles can be used as overlays on top of a map in applications such as ESRI, Mapbox, Leaflet, and others.
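A client asks a WMS server for a rendered image by sending a GetMap request with a bounding box and an image size. The Python sketch below builds such a URL using the standard OGC WMS 1.1.1 parameter names. The base URL and layer name passed in are placeholders, and any Kinetica-specific endpoint path or rendering parameters are deliberately left out because they are not described here.

```python
from urllib.parse import urlencode


def wms_getmap_url(base_url, layer, bbox, width=512, height=512):
    """Build a standard WMS 1.1.1 GetMap URL.

    base_url: the WMS endpoint (a placeholder; supplied by the caller).
    layer: the layer name to render (also a placeholder).
    bbox: (min_lon, min_lat, max_lon, max_lat) in EPSG:4326.
    """
    params = {
        "SERVICE": "WMS",
        "VERSION": "1.1.1",
        "REQUEST": "GetMap",
        "LAYERS": layer,
        "STYLES": "",
        "SRS": "EPSG:4326",
        "BBOX": ",".join(str(v) for v in bbox),
        "WIDTH": width,
        "HEIGHT": height,
        "FORMAT": "image/png",
    }
    return f"{base_url}?{urlencode(params)}"
```

The response to a request like this is an image, so the client downloads a fixed number of pixels no matter how many records fall inside the bounding box.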

ESRI & Kinetica »

Web Mapping Service »

Tech Talk: When your application is a Map

Billions of Points...

Display unlimited points on a map with interactive queries. While noisy, this provides a unique way to explore volumes of data that would not be possible if the map were generated by the front end alone.

Heatmaps...

Heatmaps take the noise out of large datasets to show patterns and nodes of maximum usage. Heatmaps generated in-database are another efficient way to visualize large datasets.
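At its simplest, a heatmap reduces many points to a count per grid cell, which is then mapped to a color. The Python sketch below shows only the binning step, under the assumption of a uniform square grid; the function name `bin_points` is hypothetical and this is not a description of Kinetica's renderer.

```python
from collections import Counter


def bin_points(points, cell_size):
    """Count points per square grid cell.

    points: iterable of (x, y) coordinates.
    cell_size: edge length of each grid cell, in the same units as the points.
    Returns a Counter mapping (column, row) cell indexes to point counts.
    """
    counts = Counter()
    for x, y in points:
        cell = (int(x // cell_size), int(y // cell_size))
        counts[cell] += 1
    return counts
```

The output size depends on the number of occupied cells rather than the number of input points, which is what makes the aggregated view cheap to ship and draw.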

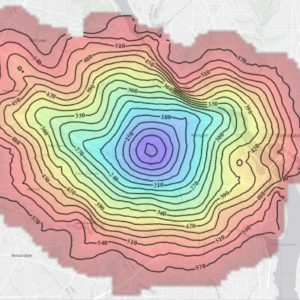

Isochrones...

Isolines represent curves of equal cost, with cost often referring to the time or distance from a starting point. Isochrones work with Kinetica’s Graph functionality to visualize distances.

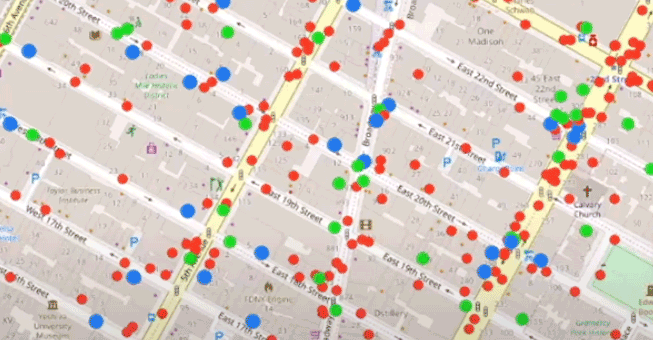

Class Break...

A class break rendering enables you to take data from one or more tables and apply styling on a per-class basis.
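Conceptually, a class break maps each value to a style according to the range it falls in. Here is a minimal Python sketch of that lookup; the function name `classify`, the break values, and the color names are illustrative assumptions rather than Kinetica rendering options.

```python
from bisect import bisect_right


def classify(value, breaks, styles):
    """Pick a style for a value based on ascending class boundaries.

    breaks: sorted boundaries, e.g. [10, 50] defines three classes:
            value < 10, 10 <= value < 50, and value >= 50.
    styles: one style per class, so len(styles) == len(breaks) + 1.
    """
    return styles[bisect_right(breaks, value)]
```

With breaks of `[10, 50]` and styles of `["green", "orange", "red"]`, a value of 5 is drawn green, 10 is drawn orange, and 75 is drawn red.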

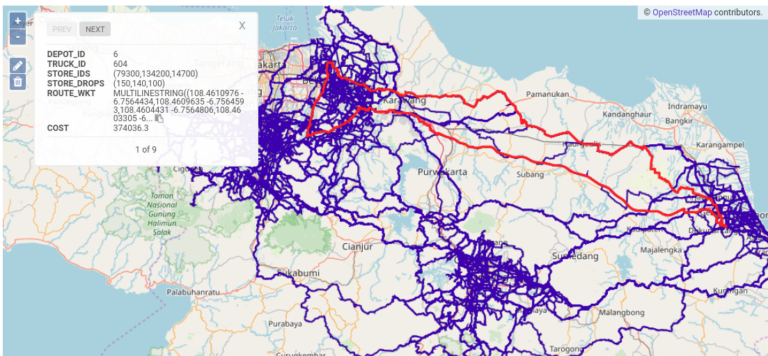

Leverage graphs for optimal routes or for network planning

Kinetica’s graph API enables you to model spatial data as graphs. Then solve difficult questions using SQL queries, or with in-built graph solvers. Outputs from solvers can be piped directly to maps.

Property Queries

Find hidden relationships in your data instantaneously. The adjacency query engine is capable of traversing millions of graph nodes in many-to-many fashion with performance at scale.

Solvers

What is the shortest path from point A to point B, factoring in speed limits, traffic, and other restrictions? Or figure out the best route to visit multiple destinations. Kinetica comes with a suite of graph solving features to make this easy.
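To show what a shortest-path question looks like once a road network is modeled as a graph, here is a Python sketch of Dijkstra's algorithm over weighted edges. Dijkstra's algorithm is used here as a familiar textbook example; nothing in this sketch describes which algorithms Kinetica's solvers use, and the graph layout and names are assumptions. Edge costs would typically be travel times, so speed limits and traffic enter through the weights.

```python
import heapq


def shortest_path(graph, source, target):
    """Find the lowest-cost route between two nodes with Dijkstra's algorithm.

    graph: dict mapping node -> list of (neighbor, cost) with cost >= 0.
    Returns (total_cost, [nodes along the route]), or (inf, []) if unreachable.
    """
    best = {source: 0.0}
    prev = {}
    heap = [(0.0, source)]
    while heap:
        cost, node = heapq.heappop(heap)
        if node == target:
            # Walk the predecessor links back to the source.
            path = [node]
            while node in prev:
                node = prev[node]
                path.append(node)
            return cost, path[::-1]
        if cost > best.get(node, float("inf")):
            continue  # stale heap entry; a cheaper route was already found
        for neighbor, edge_cost in graph.get(node, []):
            new_cost = cost + edge_cost
            if new_cost < best.get(neighbor, float("inf")):
                best[neighbor] = new_cost
                prev[neighbor] = node
                heapq.heappush(heap, (new_cost, neighbor))
    return float("inf"), []
```

Visiting multiple destinations in the best order is a harder problem than a single shortest path, which is one reason dedicated solvers are useful.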

Map Matching

Match data to networks. You can use Kinetica’s map matching tools to determine roadways and paths from noisy GPS data.
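The simplest building block of map matching is snapping a GPS point to the nearest road segment. The Python sketch below does exactly that with planar geometry. It is a deliberate simplification: practical map matching also considers the sequence of points and the network's connectivity, and this sketch does not represent Kinetica's map matching tools. All names are hypothetical.

```python
import math


def snap_to_segment(p, a, b):
    """Return the closest point to p on the segment from a to b."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    length_sq = dx * dx + dy * dy
    if length_sq == 0:
        return (ax, ay)  # degenerate segment
    # Projection parameter, clamped so the result stays on the segment.
    t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / length_sq))
    return (ax + t * dx, ay + t * dy)


def match_point(p, roads):
    """Snap a point to the nearest road.

    roads: dict mapping road name -> (start_point, end_point).
    Returns (road_name, snapped_point).
    """
    best = None
    for name, (a, b) in roads.items():
        q = snap_to_segment(p, a, b)
        d = math.hypot(p[0] - q[0], p[1] - q[1])
        if best is None or d < best[0]:
            best = (d, name, q)
    return best[1], best[2]
```

Nearest-segment snapping alone can jump between parallel roads when the GPS signal is noisy, which is the problem full map matching is meant to address.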

Learn more about Kinetica’s Graph Capabilities

Connect to your choice of tools

Kinetica plays well with a wide variety of BI and GIS tools. Or you can work with data through Kinetica Workbench and Kinetica Reveal.

BI & Observability Tools

With Kinetica’s implementation of the Postgres Wireline protocol, popular observability and BI tools including Grafana, Prometheus, Datadog, Looker, and others can monitor and integrate directly with Kinetica.

ESRI ArcGIS

Data in Kinetica can be made available to ArcGIS Insights through the ArcGIS JDBC connector and the ArcGIS Python API, with visualizations passed back through WMS tiles. This enables ArcGIS to be used with streaming location data at scale.

Tableau

Kinetica’s native extension for Tableau allows geospatial processing to be done in-database, with visualizations delivered back to Tableau through the WMS endpoint.

Custom Applications

Kinetica’s REST API, with language-specific implementations for Python, JavaScript, Java, C++, C#, and others, enables flexible integration with custom applications, including ESRI’s ArcGIS tools.

Kinetica Reveal - Native BI Framework

Kinetica comes with Reveal — a web-based BI framework that makes it quick and easy to start exploring geospatial data. Reveal also connects with Kinetica’s geospatial pipeline for advanced mapping and interactive location-based analytics.

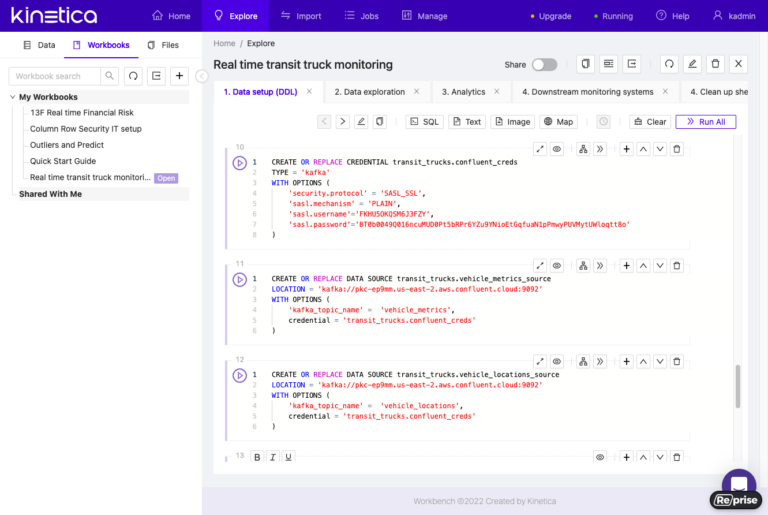

Kinetica Workbench

Kinetica Workbench is a sophisticated yet intuitive interface for interactively exploring data, organizing and storing SQL workbooks, importing and exporting data streams, and performing general database administration.

Recent Webinar:

Advanced Analytics and Machine Learning with Geospatial Data

Related Content

Build Real-time Location Applications on Massive Datasets

Vectorization opens the door for fast analysis of large geospatial datasets