I think one of the most important challenges for organizations today is to use the data they already have more effectively, in order to better understand their current situation, risks, and opportunities. Modern organizations accumulate vast amounts of data, but they often fail to take full advantage of it because they struggle finding the right skilled resources to analyze it that would unlock critical insights.

Kinetica provides a single platform that can perform complex and fast analysis on large amounts of data with a wide variety of analysis tools. This, I believe, makes Kinetica well-positioned for data analytics. However, many analysis tools are only available to users who possess the requisite programming skills. Among these, SQL is one of the most powerful and yet it can be a bottleneck for executives and analysts who find themselves relying on their technical teams to write the queries and process the reports.

Given these challenges Nima Neghaban and I saw an opportunity for AI models to generate SQL based on natural language, to give people immediate insights.

Where to begin

Initially we had no large language model (LLM) we could use, and I needed a proof of concept. I started with the OpenAI Playground, which provides tools for developing applications based on OpenAI and uses the ChatGPT LLM. With this, I was able to create a prompt that would generate SQL based on one of our sample schemas. Next, I worked with a UI developer to extend the Kinetica Workbench application so it could populate the prompt with the necessary schema details and send requests to the OpenAI API.

Our first release relied on the OpenAI LLM to generate SQL. We soon realized it had limitations, and many of our largest customers could not take full advantage of it. For example, many organizations can’t send proprietary data to a third party like OpenAI. In addition, the OpenAI models would often unexpectedly change results without explanation. There were many types of optimizations that would be helpful for efficient code generation, but they were not supported by OpenAI because it had not been optimized for generation of creative text. .

We understood that, to make this capability feasible for all our customers, we would need to develop a model that we had full control over.

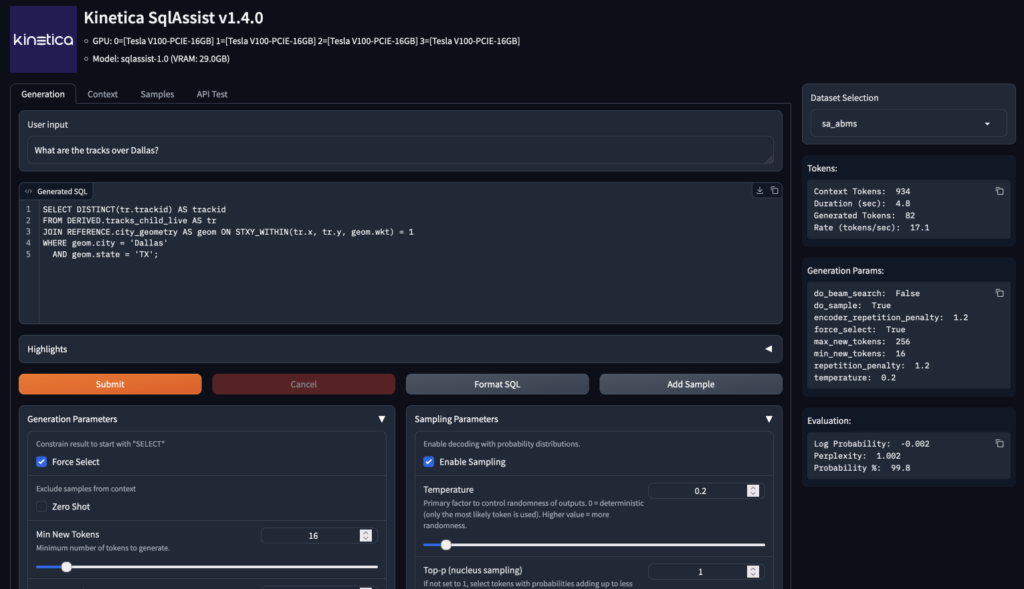

Thinking like the startup we still are, we acquired some GPU’s off eBay that I put to use for fine-tuning a model. I wanted a model that was specifically trained for SQL generation, so I used some techniques not available with OpenAI. There are custom tokens for identifying SQL context, user, and assistant sections of the prompt. The training data uses masks to allow the optimizer focus on solutions for the SQL generation while using the other sections as preconditions.ChatGPT uses a relatively simple algorithm for inferencing that is more useful for creative writing than code generation. For the Kinetica model, I chose a deterministic algorithm called beam search that evaluates multiple solutions before choosing the best one. I also created a custom UI for developing and testing prompts under different conditions of inferencing parameters. This helped root out problems, and gave me an understanding of what worked best.

Contexts in context

In my previous installment, I brought up the subject of the context — a unique concept that the Kinetica namespace treats as a first-class object. I offered an analogy where I compared an LLM’s context to a human brain’s short term memory. During inferencing we use a strategy called in-context learning that provides a means to to give an LLM extra instructions without the need to run a compute intensive fine-tuning process..

In the Kinetica model, a context is currently limited to about 8,000 tokens, with each token representing 3 or 4 characters. Future LLM architectures may be able to handle longer contexts, which would free them to learn more continuously. Right now, an LLM is usually suspended in a frozen state after training, and it has its contexts wiped after each inference session.

At Kinetica, we developed a SQL syntax residing within the database to represent the data that we load into the context during the inferencing process. Then I developed an API endpoint to service the inferencing request coming from the database. The end result is a generative AI capability integrated into our core database, with first-class objects manipulated like any other database object.

Touchy subjects

Some users expect magic from the LLM when they ask the model to generate SQL for questions for which it does not have the data. In many cases, the model is working on proprietary customer data that was nowhere in its training.

I like to compare this task to asking a programmer/analyst to write SQL based only on the information in the context. How likely would a human successfully write a SQL instruction, without having seen the schema or data? You may have had an experience with a new coworker where you assign them a task, and they tell you they don’t understand something, or there’s a piece of information missing somewhere. Sometimes their sentiment is justified.

An LLM is trained to always respond with the result with the highest probability of being correct. Because of this, it can’t give you direct feedback about how, why, or where it’s confused — say, for instance, “I don’t know how to join this table, because I am not sure which term is the foreign key.” Instead, we have to infer the nature of the problem an LLM is having, solely from the erroneous responses we receive.

When used correctly, the LLM can seem surprisingly smart about what it can figure out for itself. Still, you still need to be thoughtful about what it needs to know. I can recall a number of times when I got frustrated trying to make it do something, and then I realized I gave it an incorrect example.

For this reason, among others, training data needs to have high quality and be unambiguous. Some studies have shown that, if you train a model with high-quality curated data, you need only a small fraction of what would otherwise be required to achieve a desired level of performance. If a training sample gives an incorrect answer, the model can get confused when it tries to correlate that answer with other correct answers — which leads to performance degradation. And if the question/answer pair includes an ambiguous question, the model may confuse the response as the answer for a different, but similar, question.

There is also this notion that LLM multitasking can degrade performance. For example, if you ask ChatGPT to answer a question while doing something trivial like capitalizing every noun, it might give lower-quality answers. When a model generates SQL, we want to keep its attention on generating a query that will produce a correct result. When it divides its attention on how best to format the code, or it sees samples in its context showing a number of ways to do the same thing, then it gets distracted. So it’s good to consistently format samples, and avoid using different varieties of syntax when just one will suffice.

All this raises some very interesting questions about how we could enhance LLMs in the future. Right now, LLMs don’t really learn by doing, but they could if we integrated a process capable of generating new training data, based on feedback from human users. By indexing successful samples with embeddings, we could give the LLM its own long-term memory, so it could improve over time.