Financial risk becomes more difficult to assess every year, which makes newer approaches such as X-Value Adjustment (XVA) essential. XVA is a generic term referring collectively to a number of different “Valuation Adjustments” applied to derivative instruments held by banks. The purpose of these adjustments is to 1) hedge against losses that may arise from counterparty default, and 2) help determine the amount of capital required under Basel III, the regulatory framework on bank capital adequacy, stress testing, and market liquidity risk.

Valuation adjustments include the following:

- CVA (Credit Valuation Adjustment) – This is the most widely known and well understood of the XVAs. It adjusts for the impact of counterparty credit risk.

- DVA (Debit Valuation Adjustment) – The benefit a bank derives in the event of its own default.

- FVA (Funding Valuation Adjustment) – The funding cost of uncollateralized derivatives above the “risk-free rate.”

- KVA (Capital Valuation Adjustment) – The cost of holding regulatory capital as a result of the derivative position.

- MVA (Margin Valuation Adjustment) – Refers to the costs of centrally cleared transactions, such as adjustments for the initial margin and variation margin.

To put it simply, XVA is about computing potential risk, now and in the future. It reflects the capital that a trade consumes over its lifetime, which can either be a source of cost or benefit. The goal of XVA is to insulate banks from risk where possible and allocate the right capital.
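To make the CVA entry above more concrete, here is a minimal sketch of one common textbook approximation: sum the discounted expected exposure on each date, weighted by the probability that the counterparty defaults in that interval, and scale by the loss given default. The function name `cva`, the flat hazard rate, the flat risk-free rate, and every number in the example are assumptions chosen for illustration; none of them come from this article or from any particular bank's model.

```python
import math


def cva(expected_exposure, times, hazard_rate, recovery_rate, risk_free_rate):
    """Approximate unilateral CVA on a discrete time grid.

    CVA ~= (1 - R) * sum_i DF(t_i) * EE(t_i) * PD(t_{i-1}, t_i)

    Assumes a flat hazard rate and a flat continuously compounded
    risk-free rate (both simplifications made for this sketch).
    """
    if len(expected_exposure) != len(times):
        raise ValueError("expected_exposure and times must have the same length")

    total = 0.0
    prev_t = 0.0
    for ee, t in zip(expected_exposure, times):
        # Probability of default between prev_t and t, using S(t) = exp(-h * t)
        default_prob = math.exp(-hazard_rate * prev_t) - math.exp(-hazard_rate * t)
        # Discount factor back to today
        discount = math.exp(-risk_free_rate * t)
        total += discount * ee * default_prob
        prev_t = t

    # Only the unrecovered fraction of the exposure is lost on default
    return (1.0 - recovery_rate) * total


# Hypothetical five-year expected exposure profile, in currency units
times = [1.0, 2.0, 3.0, 4.0, 5.0]
expected_exposure = [1.0e6, 1.4e6, 1.5e6, 1.2e6, 0.6e6]

charge = cva(expected_exposure, times,
             hazard_rate=0.02, recovery_rate=0.4, risk_free_rate=0.03)
print(f"CVA: {charge:,.0f}")
```

In practice the expected exposure profile is itself the expensive input, since it typically comes from simulating the whole portfolio across many market scenarios.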

Why is XVA Needed?

Prior to 2007, trades were cleared at fair valuations. The costs for capital and collateral were essentially irrelevant to banks’ investment decisions. All trading was done without any real understanding of the value of a trade in the future; there was very little modeling taking place. This led to increasingly complex investment portfolios of variable value, and ultimately, to the stock market crash in 2008.

Following the crash, major reform took place in the financial industry. The Basel III accord was put together to make sure that banks could model the data, analyze trades effectively, and store the data needed to measure their exposure at all times. Banks had to make sure that the trades they were conducting could be capitalized. This requirement represents a massive drag for a bank, as it can result in additional expense and reduced profitability. To operate efficiently, play by the rules, and keep the regulators happy, banks needed 1) lots of data, 2) clever forecasting models, and 3) a huge amount of computational horsepower to compute XVA as each trade occurred, while also re-assessing the bank’s position whenever there was a major fluctuation in the markets.

XVA Challenges

Real-time, pre-trade XVA measures are extremely complex. They require an enormous amount of compute power to handle the data being generated. To compute risk, banks have to continually and comprehensively measure trading activity and currency movements. They must compute the entire cost of trading, and re-compute if market conditions change significantly. Banks have to take into account these many different risk factors using a mix of real-time and batch/static data.

Before GPUs made it into the mainstream, banks had to rely on large numbers of CPUs. Batch processing simply wasn’t up to the challenge, as banks had to compute risk on hundreds of thousands or millions of trades per day, with thousands of counterparties in over 20 different currencies.

GPUs to the Rescue

These types of calculations are extremely complex and time-critical; CPUs just don’t have the processing power to handle the workloads in real time. In addition, banks have to retain the data and serve it to traders in real time via a UI for flexible data slicing and drilling. Fortunately, compute-intensive workloads like real-time adjustment calculations are perfect for high-performance GPUs, which can have more than 5,000 cores. For example, a Monte Carlo simulation, which models risk by evaluating a large number of randomly generated market scenarios, is very compute heavy and much better suited to GPUs than CPUs.
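As a rough illustration of why Monte Carlo is so compute heavy, the sketch below estimates an expected exposure profile for a single forward-style position by simulating many price paths. It is plain CPU-bound Python using only the standard library, not Kinetica code or a GPU kernel. The function name `simulate_expected_exposure`, the geometric Brownian motion price model, the simplified payoff, and all parameter values are assumptions made for this example.

```python
import math
import random


def simulate_expected_exposure(spot, strike, volatility, drift, times,
                               n_paths, seed=42):
    """Estimate expected positive exposure on each date by Monte Carlo.

    Each path evolves the underlying price with geometric Brownian motion.
    The position value is approximated as (price - strike), and exposure
    is the positive part of that value (a deliberately simple payoff).
    """
    rng = random.Random(seed)  # seeded so repeated runs give the same result
    sums = [0.0] * len(times)

    for _ in range(n_paths):
        price = spot
        prev_t = 0.0
        for i, t in enumerate(times):
            dt = t - prev_t
            z = rng.gauss(0.0, 1.0)  # standard normal shock for this step
            price *= math.exp((drift - 0.5 * volatility ** 2) * dt
                              + volatility * math.sqrt(dt) * z)
            sums[i] += max(price - strike, 0.0)
            prev_t = t

    # Average the positive exposure across all simulated paths
    return [s / n_paths for s in sums]


times = [0.25 * k for k in range(1, 9)]  # quarterly grid over two years
profile = simulate_expected_exposure(spot=100.0, strike=100.0, volatility=0.2,
                                     drift=0.01, times=times, n_paths=20_000)
for t, ee in zip(times, profile):
    print(f"t={t:.2f}  EE={ee:.2f}")
```

Every path in the outer loop is independent of the others, which is what makes this kind of workload a natural fit for thousands of GPU cores. A real XVA run repeats this across many trades, counterparties, and risk factors rather than one position.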

Life After Moore’s Law

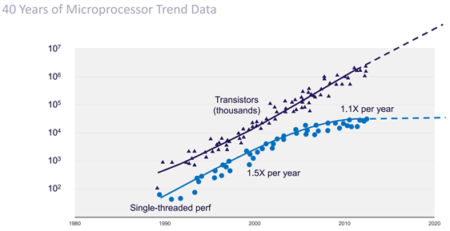

Moore’s Law says that the number of transistors that can be packed into a given unit of space will roughly double every two years. To take advantage of all of these transistors, you need to do work in parallel; a single-threaded approach will not deliver the desired results.

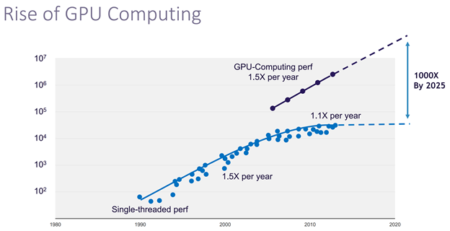

Rise of GPU Computing

The chart above depicts the enormous processing potential enabled by GPUs. As Moore’s Law tails off, GPUs are picking up and filling the gap, providing solutions like Kinetica with a lot of valuable horsepower. Since Kinetica leverages GPUs and in-memory processing, banks can now perform risk simulation computations in real time on data as it streams in when leveraging Kinetica’s insight engine for the workload.

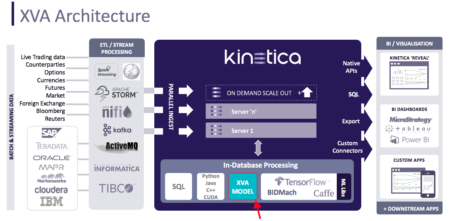

XVA Architecture

Here’s an example of Kinetica’s XVA architecture that is used at a major multinational bank:

Kinetica Brings Advanced Analytics and Machine Learning to Finance

Kinetica provides a bridge to bring advanced analytics and machine learning into financial analysis. Financial institutions are merging more and more sources of data and need to run ever more sophisticated risk management algorithms and return results pre-trade. Kinetica’s user-defined function API enables a next-generation risk management platform that provides real-time drill-down analytics, advanced machine learning, and on-demand custom XVA library execution. With Kinetica, banks and investment firms benefit from near-real-time tracking of their risk exposures, allowing them to monitor their capital requirements at all times.

To experience the power of Kinetica’s instant insight engine, download the trial edition today. And be sure to watch my colleague James Mesney’s talk from last year’s GPU Technology Conference in Munich: How GPUs Enable XVA Pricing and Risk Calculations for Risk Aggregation.