From 100K+ Lines of Microservices Code to Simple SQL

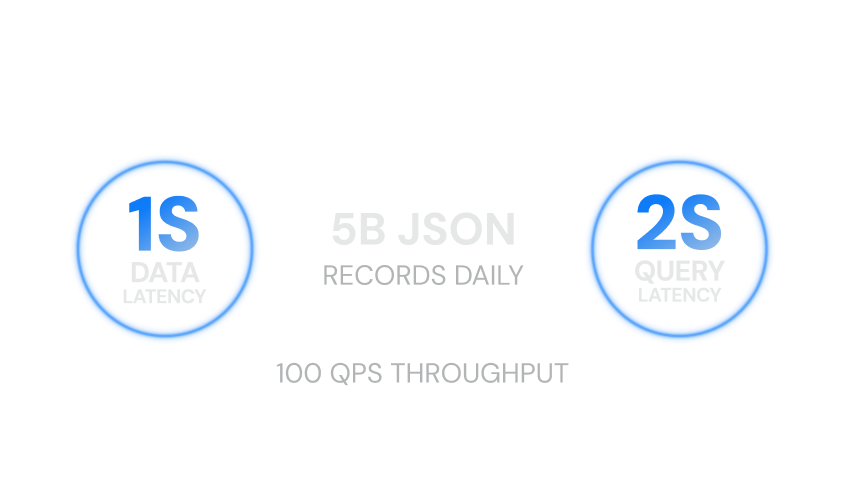

How a major hedge fund redefined real-time risk analytics, processing 5 billion JSON objects daily with sub-second latency and a simpler architecture.

What Drove the Change

Delivering real-time, scalable risk analytics across billions of daily data points.

Scale Without Delay

The fund’s risk platform had to process billions of JSON objects daily while making every update instantly queryable, without data loss or performance lag.

Faster Metric Innovation

Each new risk metric required custom microservices, slowing development. The goal was to replace this complexity with a more agile, SQL-driven process.

Flexible Scalability

As data volumes exploded, the system struggled to maintain performance. A new architecture was required to scale seamlessly under continuous growth.

Instant, Complex Analytics

Traders and analysts needed to perform complex calculations against constantly changing datasets, demanding sub-second responses for better decision-making.

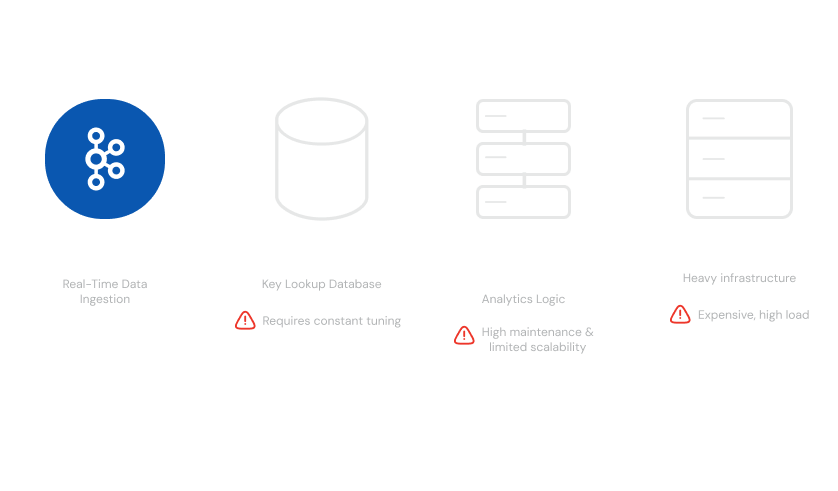

The Previous Architecture

A microservice-heavy setup struggling to keep up with real-time financial data demands.

The platform relied on a large Cassandra cluster that served as a simple key lookup store, designed for high-volume data ingestion from Kafka at rates of up to two million wide records per minute. Each analytical function was a standalone microservice with its own processing logic, and a large pool of application servers was needed to host them all. The architecture quickly became complex and expensive to maintain.
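To make the legacy pattern concrete, here is a minimal Python sketch. It assumes a plain dict stands in for Cassandra's key lookups, and the record fields (`desk`, `instrument`, `quantity`, `price`) and the `net_exposure_service` function are hypothetical illustrations, not the fund's actual code. The point it shows is that a key lookup store only answers "give me the record for this key", so every metric service must know which keys to fetch and must do its own aggregation in application code.

```python
from collections import defaultdict

# Hypothetical stand-in for the key lookup store: position_id -> wide record.
# (Assumption: records have already been parsed from JSON into dicts.)
kv_store = {
    "pos-1": {"desk": "rates", "instrument": "UST10Y", "quantity": 100, "price": 98.5},
    "pos-2": {"desk": "rates", "instrument": "UST2Y", "quantity": -50, "price": 99.8},
    "pos-3": {"desk": "credit", "instrument": "CDX.IG", "quantity": 200, "price": 101.2},
}


def net_exposure_service(position_ids):
    """Hypothetical 'net exposure by desk' microservice.

    The store supports lookups by key only, so the caller must supply the
    keys, and all filtering and aggregation happen here in application code.
    """
    totals = defaultdict(float)
    for pid in position_ids:
        record = kv_store.get(pid)
        if record is None:
            continue  # missing key: skip it rather than fail the whole request
        totals[record["desk"]] += record["quantity"] * record["price"]
    return dict(totals)


print(net_exposure_service(["pos-1", "pos-2", "pos-3"]))
```

Each additional metric (gross exposure, exposure by instrument, and so on) would need another function like this, plus its own deployment, which is the overhead the next section describes.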

Bottlenecks in the Legacy Architecture

Ingestion Bottlenecks

Data ingestion from Kafka into Cassandra required constant tuning to handle surges, creating operational overhead.

Limited Analytics

Microservices could only process small portions of data, restricting the scope and depth of real-time insights.

High Development Overhead

Every new metric demanded a new microservice, increasing the maintenance burden and slowing innovation.

Infrastructure Costs

The need for heavyweight nodes to host containerized microservices drove significant infrastructure spending.

Real-Time Reinvented

Unified ingestion and analytics with GPU-accelerated performance.

With Kinetica, the hedge fund achieved seamless, high-speed ingestion and analytics on a single platform. Kinetica’s native Kafka integration comfortably exceeded the ingestion SLAs, averaging 1.3 million records per minute on a small AWS cluster.
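For a sense of the sustained load behind these figures, the per-minute rates quoted in this case study can be converted to per-second throughput. This is a minimal Python sketch that assumes records arrive evenly across each minute; in practice ingestion arrives in surges, as the bottleneck list above notes, so momentary rates can be higher.

```python
SECONDS_PER_MINUTE = 60


def per_second(records_per_minute: float) -> float:
    """Average records per second, assuming an even spread over each minute."""
    return records_per_minute / SECONDS_PER_MINUTE


# Rates taken from this case study: Kinetica's average and the legacy design peak.
rates = [
    ("Kinetica average", 1_300_000),
    ("Legacy design peak", 2_000_000),
]

for label, records_per_minute in rates:
    print(f"{label}: {per_second(records_per_minute):,.0f} records/s")
```

Run as written, this prints roughly 21,667 records/s for the Kinetica average and 33,333 records/s for the legacy peak.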

Complex queries could now run directly against live datasets, enabling analysts to generate richer, real-time insights.

Most importantly, more than 100,000 lines of microservice code were replaced with simple, flexible SQL queries, drastically reducing complexity and accelerating innovation.
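To illustrate what "a metric as a SQL query" means in practice, here is a minimal sketch that uses Python's built-in `sqlite3` module as a stand-in database. This is an assumption for illustration only: it is not Kinetica's client API or SQL dialect, and the `positions` and `prices` tables and their columns are hypothetical. The sketch computes net and gross exposure per desk with a single JOIN plus GROUP BY, the kind of logic that previously lived in a dedicated microservice.

```python
import sqlite3

# In-memory SQLite database standing in for the analytics store (assumption).
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE positions (
        position_id TEXT PRIMARY KEY,
        desk        TEXT,
        instrument  TEXT,
        quantity    REAL
    );
    CREATE TABLE prices (
        instrument TEXT PRIMARY KEY,
        price      REAL
    );
    """
)
conn.executemany(
    "INSERT INTO positions VALUES (?, ?, ?, ?)",
    [
        ("pos-1", "rates", "UST10Y", 100),
        ("pos-2", "rates", "UST2Y", -50),
        ("pos-3", "credit", "CDX.IG", 200),
    ],
)
conn.executemany(
    "INSERT INTO prices VALUES (?, ?)",
    [("UST10Y", 98.5), ("UST2Y", 99.8), ("CDX.IG", 101.2)],
)

# Two risk metrics expressed declaratively: JOIN positions to prices,
# then aggregate per desk. No custom service code is involved.
RISK_BY_DESK = """
    SELECT p.desk,
           SUM(p.quantity * px.price)      AS net_exposure,
           SUM(ABS(p.quantity * px.price)) AS gross_exposure
    FROM positions AS p
    JOIN prices AS px ON px.instrument = p.instrument
    GROUP BY p.desk
    ORDER BY p.desk
"""

for desk, net, gross in conn.execute(RISK_BY_DESK):
    print(f"{desk}: net={net:,.2f} gross={gross:,.2f}")
```

Under this approach, a further metric is a further query over the same tables rather than a new deployable service.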

Why Kinetica Outperformed Other Technologies

| Capability | Kinetica (AI-Powered, Real-Time Analytics) | Modern analytical databases (ClickHouse, TimescaleDB, and StarRocks) |
|---|---|---|
| Ingestion Speed | 1.3M+ records/min, effortlessly | Unable to meet necessary ingestion speeds; slower than Cassandra |
| Query Performance (during ingestion) | Sub-second latency | Poor, especially for complex queries with JOINs |
| Kafka Integration | Native Kafka consumption supported | No native Kafka consumption capability |
| Development Overhead | Simplified to SQL queries | Higher; may require manual tuning or complex queries |
| Infrastructure Load | Lean AWS footprint | Larger infrastructure footprint |
| Monitoring Effort | Auto-optimized ingestion pipeline | Manual tuning often required |

Talk to Us!

The best way to appreciate the possibilities that Kinetica brings to high-performance real-time analytics is to see it in action.

Contact us, and we’ll give you a tour of Kinetica. We can also help you get started using it with your own data, your own schemas and your own queries.